Theory vs. Practice

Diagnosis is not the end, but the beginning of practice.

What makes something "notable"?

Wikipedia presents Node as a system designed for writing highly scalable Internet applications, notably web servers. A few more quotes found through the "trusted links" give additional clues:

It’s the latest in a long line of "Are you cool enough to use me?" programming languages, APIs, and toolkits. In that sense, it lands squarely in the tradition of Rails, and Ajax, and Hadoop, and even to some degree iPhone programming and HTML5.

Go to a big technical conference, and you’ll almost certainly find a few talks on Node, although most will fly far over the head of the common mortal programmer.

[In contrast, traditional] Web servers are based on a client/server execution model that's bound up with operating system threading models and have inherent limits in terms of scale.

Node makes a much smaller footprint. It allocates web server resources on an as-needed basis, not pre-allocating a large chunk of resources for each user. For example, Apache might assign 8MB to a user, while Node assigns 8KB.

Walmart executives made it clear that the benefit of using Node was far greater than the risk – an assertion many other large companies (and providers of Node support systems) have been waiting to hear from a household name like Walmart.

Wikipedia, not always known for its probity, lists "trusted sources" reporting that Microsoft embraced Node. O'Reilly published two books; The Register, Forbes, and Wired relay the hype; and HP and DELL are cited as users.

Filling the Blanks in the Story

First, let's have a look at how the English dictionary defines "notability", the quality that Wikipedia requires for a subject to be listed:

"Notability: the quality that makes something worth paying attention to".

According to The Register, Node was two years old in 2011, so it was first released in 2009 – just like G-WAN.

The Register further states:

"Matt Ranney and his team originally conceived Voxer as a two-way VoIP radio for the military, and he started coding in good old fashioned C++. 'That's what you use for a serious, high-performance military application,' he says.

But C++ proved too complex and too rigid for the project at hand, so he switched to Python, a higher-level language that famously drives services at the likes of Google, Yahoo!, and NASA. But Python proved too slow for a low-latency VoIP app, so he switched to Node.

Node moves V8 from the client to the server, letting developers build the back end of an application in much the same way they build a JavaScript front end.

In certain Silicon Valley circles, Node is known as The New PHP. Or The New Ruby on Rails. Or The New Black. It's The Next Big Thing, according to, well, just about everyone who uses it."

Let's review the seriousness of these "trusted sources":

One article claims that because C++ is said to be too complex and Python is said to be too slow, the military has had to use Node, and that not only the U.S. Army will have to do so, but also Google, Yahoo! and NASA.

This "journalist" also writes that PHP, Ruby and "Black" (whatever this is supposed to be – "Black Magic" or "Black Gold"?) are obsolete now that Node is here – and that anyone using Node will call it "The Next Big Thing" (more on that later).

If you remove the hype, what information remains? "Node moves V8 from the client to the server." That is the only true statement. But that's rather difficult to sell as a marvel: Node is not alone in doing that – and it might not be the best at it.

The Register alone has published 50 articles about Node. Forbes lists a whopping 250 articles. Wired mentions it 80 times. Such servile PR coverage and favorable appointed exposure cost millions of dollars to obtain – the very same corrupt Wikipedia way.

Let's sum up what makes Node so "notable":

- Highly scalable by design – perfect for Web servers

- Cool like Rails, Ajax, Hadoop, the Apple iPhone and HTML5

- Cited in "big conferences" – that "mere mortals can't understand"

- Making old-fashioned OS threading an obsolete vestige of the past

- Much smaller footprint by sparingly allocating resources on demand

- Walmart recognized that "the benefits of using it greatly outdid the risks"

- It's "The Next Big Thing", according to, well, "just about everyone who uses it"... claims the Register.

Apart from the recurring "coolness", no evidence is provided to support any of the bold technical claims. One thing is particularly worrying: conferences (normally aimed at explaining things) are supposedly too technically challenging for software engineers to understand what the innovation is all about (the subliminal message being: don't try to go there, just trust the hype).

How do you evaluate an innovation? By using it. So let's test "Node.js", also called "The Next Big Thing" by the most "Trusted Sources":

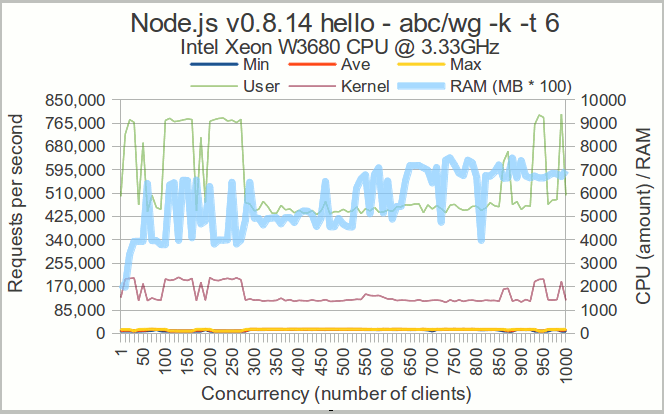

Node.js hello

If Node.js is "highly scalable", it must be horizontally: at 15k RPS, Node.js does not fully use any of the 6 CPU cores, other than to produce tons of heat.

Node.js' CPU and RAM usage is so high that the scale had to be resized from 6k to 10k. The chaotic curves do not give the impression of anything seriously engineered.

The "much smaller footprint" claimed for Node.js is invisible, and the expected benefits of the event-based architecture are nowhere to be found. As a server technology, the fact is that Node.js sucks, and very badly.

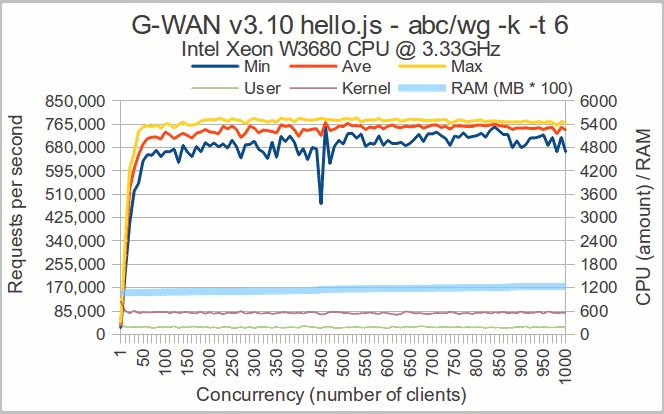

G-WAN hello.js

G-WAN runs Node.js as a script engine (which amounts to testing V8). The contrast with Node.js used as a server is shocking:

G-WAN delivers 788k RPS, handling 52x more requests in 47x less time (28 mins vs. 22h15). G-WAN needs 2,444x fewer servers than Node.js to run a mere Javascript hello world.
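The text does not spell out how the 2,444x figure is obtained. A minimal Python sketch, assuming it is simply the product of the two ratios quoted above, each rounded down (788k vs. 15k RPS, and 22h15 vs. 28 minutes of total run time), reproduces it from the numbers reported on this page; nothing here is re-measured.

```python
# Figures as reported in the text (assumed inputs, not re-measured).
node_rps = 15_000              # Node.js: ~15k requests per second
gwan_rps = 788_000             # G-WAN + V8: ~788k requests per second
node_minutes = 22 * 60 + 15    # Node.js total run time: 22h15
gwan_minutes = 28              # G-WAN total run time: 28 minutes

rps_ratio = gwan_rps / node_rps            # throughput ratio, ~52.5
time_ratio = node_minutes / gwan_minutes   # run-time ratio, ~47.7

# Assumption: the 2,444x figure is the product of the two truncated ratios.
combined = int(rps_ratio) * int(time_ratio)

print(f"throughput ratio: {rps_ratio:.1f}x")
print(f"run-time ratio:   {time_ratio:.1f}x")
print(f"product of truncated ratios: {combined}x")
```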

And I am not even talking about the RAM and CPU usage, where G-WAN outdoes the "revolutionary" Node.js allocations made on an "as-needed basis".

Well, seeing how poorly Node.js performs and scales, we are, unlike The Register, not tempted to call it "The Next Big Thing".

But if Node.js, "The Next Big Thing", crawls so miserably, what should we call G-WAN + Javascript, which runs 2,444x faster?

Since 2009, Wikipedia has kept calling G-WAN "not notable" despite the independent reliable sources that dozens of technically inferior Web servers lack... while those servers enjoy the exposure that Wikipedia editors who sell their services advertise as so valuable that it is "almost guaranteed to grant a top three Google hit".

Note that G-WAN should also be listed in Wikipedia's "Comparison of server-side JavaScript solutions", where the technically inept Node.js stands alongside more than 20 server solutions (most of which are not notable at all but are listed there anyway).

And I am not even mentioning Wikipedia's "Comparison of application servers", where G-WAN deserves (clearly more than many others) an entry in the C++, Java, .NET, Objective-C, Python, Perl and PHP sections, in addition to being in a position to create legitimate sections such as asm, ANSI C, D, Go, Javascript, Lua, Ruby and Scala.

The recurring deletion of the ANSI C section by "neutral editors" reveals how unscrupulous this holy war is: ANSI C is 40 years old, and all the 'more modern' languages that deserve an entry in Wikipedia are based on ANSI C. So much for the alleged love for "neutral" and "relevant" information in the fake Temple of Knowledge. Other (dark) forces are obviously at work – and they suffer no contradiction in Wickedpedia, the haven of full-time (appointed) liars and Internet shills.

What is Shill Marketing?

Someone who works for a business but pretends not to in order to seem like a reliable source is a shill. Shill marketing is the act of using a shill to try and convince the public that a product is worth buying. Sometimes illegal, shill marketing is usually considered dishonest. If customers find out that they have been targeted with shill marketing, they often feel cheated.

The concept of shill marketing is simple. People tend to feel more comfortable with a product or service if they know someone else who has a good experience with it. If someone who isn't associated with the company tells you how good it is, the claim will probably be more convincing than if it came from the company spokesman.

A shill marketing worker is actually employed by the company, but pretends not to be. The shill marketer acts like a regular customer and tries to encourage people to buy the product. Often in shill marketing, multiple shills work together, reinforcing each other's story and engaging in a conversation about how great the product is.

The Internet has become an ideal venue for shill marketing. Since the Internet is anonymous, shill marketing is much easier. One person can pretend to be several different customers in the same shill marketing setup. Chat rooms, message boards, and blogs are common stages for an Internet shill marketing campaign.

Employees engaged in a shill marketing setup register one or more accounts on an Internet service, a message board for example. Usually one of the shill marketers will ask an innocent sounding question. "I was interested in buying product X. Has anyone heard anything about it?" This is the classic shill marketing setup.

Another user, possibly the same person using a different account login, will answer the first question by praising the product. "Oh yeah, I started using product X a month ago and I love it. I use it all the time. It's the best product in the whole world!"

Shill marketers sometimes try to bring other members of the message board into the discussion, but the hook is already set. Anyone who isn't familiar with the shill marketing setup might believe that the endorsement is real, and not just a cheap trick. Obviously, not all product endorsements are shill marketing in disguise. If the setup seems too perfect, and the answer is quick and only offers rave reviews, it's possible that you're not seeing an actual testimonial, but shill marketing in action.

The practice obviously gains leverage when "reputable sources" (such as the mainstream media) join forces to echo the shills. The goal of all this hype is to "occupy the space" so there is no room left for other solutions – both in the landscape and in people's minds.

How sustainable is such a scam? Who benefits from excluding the competition? And at what price? Here I am not only talking about the money used to pay the corrupt editors; I am referring to the cost for the public of being exposed to corporate propaganda presented as "neutral and informative" under the cover of a fake encyclopedia.

If the masses knew how much Wikipedia is based on corruption, they would probably not trust the contents of its articles when making strategic investments. That conflict of interest and the outrageous collusion, with the sole goal of deceiving consumers, sound very much like the scandal of the rating agencies, which rated toxic investments as "totally safe".

This sick business model only survives because most authorities choose not to sanction any of the recurring abuses: no rating agency was punished in the U.S. or in Europe (Australia did it once) for having published "opinions" in exchange for payments from the vendors – and Wikipedia is surfing on the same wave of total impunity granted by... lobbying (the polite word for corruption).

When you consider the damage that these (duly appointed) lies have done to everyone by creating a global economic crisis, you can measure how well protected the fraudsters are, and how much the upper circles value the practice of deception.

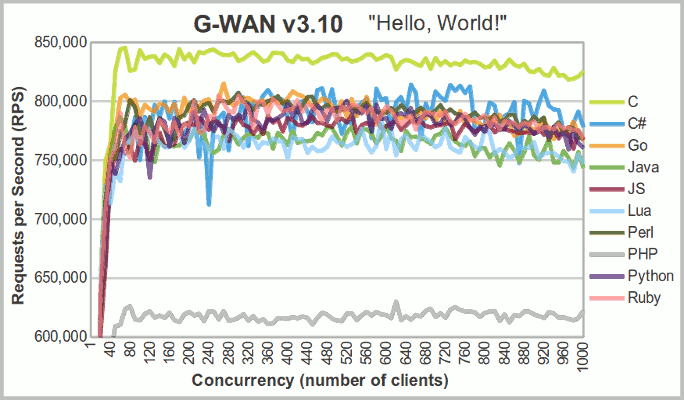

Just in case the Javascript result seems accidental, Python (said to be "much slower than Node") flies at 797k RPS with G-WAN.

G-WAN offers scripts in 15 programming languages. Each of them runs on its own native runtime (rather than on a subset re-implemented in the Java or .NET virtual machines).

And all of those languages fly (several orders of magnitude) higher and faster with G-WAN than with any other solution available on the market.

G-WAN makes asm, C, C++, C#, D, Go, Java, Javascript, Lua, Objective-C, Perl, PHP, Python, Ruby and Scala fly higher than the proprietary frameworks written by the language vendors themselves.

G-WAN + Ruby run at 804k RPS. That's higher than any other Ruby framework, and just a bit slower than G-WAN + Perl.

G-WAN + Go run at 814k RPS (see how much better this is than the native Go server tested on the same machine).

G-WAN + Perl run at 807k RPS. PHP is lagging 'behind' but, with the help of its vendor, PHP could probably fly too. Never before has a server allowed PHP to run at 630k RPS.

PHP started in 1995 – way before multicore CPUs (2004). But Google Go was born in 2009 (like G-WAN) and it provides goroutines to help with parallel programming and concurrency. This language has real qualities, but like with many other programming languages targeting the Web, one critical feature is still missing: a decent server.

Google Go, Node.js, Nginx (and dozens of asthmatic Web servers that completely lack "trusted sources") are all listed on Wikipedia. But Wikipedia will not let in the only server able to make all Web apps fly. Demonstrating its "neutrality", Wikipedia uses robots to automatically delete any direct or indirect reference made to G-WAN – whether this is done by U.S. or European users.

Those dynamic scripted languages reputed to be "slow" are more than usable in high-performance environments with G-WAN. It is quite notable that G-WAN makes them run faster than pre-compiled and statically-linked nginx C modules.

Nginx, first released in 2004, fuels many app. servers because it is considered one of the fastest servers. But on the same machine used for the tests above, Nginx is much slower at serving static contents than G-WAN is at serving dynamic contents.

That's because G-WAN uses the CPU cores introduced in 2004, which the web server vendors do not want to use. Why are Wikipedia and the mainstream media imposing this blackout? The only explanation that makes sense is that they need to make sure that nobody will know there is a way out of the multicore trap.

For servers that are (a) sold by reputable vendors, (b) well funded, and (c) built by rocket scientists, it is disturbing to see all of them reject the idea of doing better.

After all, since the 2000s, governments have funded research to tackle the "Parallel Programming challenge", considered "the single most important problem facing the global IT industry" by the European Union, Intel and Microsoft.

Most app. servers don't support 15 programming languages, so why should G-WAN be the only one to do it?

- apps can instantly be used and understood by billions of heterogeneous users – all sharing the same user-friendly platform

- companies can split projects into tasks using different languages in one single product (user interface, database, critical code)

- serving many languages was the only way to show that something is broken in this (supposedly) highly-competitive market.

By supporting 15 programming languages, G-WAN (a) lets legacy applications scale and perform on modern CPU architectures, (b) makes it far easier to experiment with and use several languages in the same Web site or Web application, and (c) allows an unprecedented number of developers of various backgrounds and technical abilities to harness the parallel processing power of the ever increasing number of cores available on each chip.

Who else lets you do that?

- Not Google App Engine (using Java-based Python/Scala/Quercus-PHP and Go).

- Not Microsoft Azure (using .NET-based C#/Java/PHP/Python and Node.js, tested above).

- Nor Joyent, the official sponsor of Node.js which we have seen failing on all its claims (including "coolness": lies are not cool).

- Nor PHP frameworks, as we have seen recently.

- Nor C# stacks, also benchmarked.

- Nor Java app. servers, whatever their claims to "do a better job".

- Nor Scala, previously tested, despite the interest of heavyweights like Intel.

Wait a minute, isn't Node.js' event-based I/O aimed at cutting idle server time when querying slow databases? What about that? How does G-WAN deal with this problem?

Very well, thank you: just 2 weeks ago, G-WAN presented an online game demo at ORACLE Open World (visited by 45,000 I.T. professionals), where 100 million avatars were moving in real time and where people could join the game to play along with the avatars.

Here, G-WAN was accelerating ORACLE NoSQL (a distributed Java database) on a single 6-core desktop machine.

Can you do that with Node.js?

So, who knows better?

G-WAN being so much better than all the others since 2009, how can Wikipedia, The Register, Wired, and Forbes (among hundreds of other "trusted sources") only talk about sloppy technology? How come the hundreds of billions of dollars invested in universities and R&D centers at IBM, Intel or Microsoft only produce bad products?

That's because Wikipedia, the "Trusted Media" and the corporate ecosystem are all devoted to promoting "reputable" players: the ones that claim to perform and scale without actually doing it.

And they exclude challengers to protect their walled garden of cleverly engineered illusions, because abusing people boosts sales.

Why would only mediocre technologies be promoted?

A revered player in finance and business liked to repeat on every occasion: "Competition is a deadly sin."

In the (very) upper floors, competition is seen as "bad" because, by disrupting incumbents, it reduces the revenue of all the (major) market players – as well as the income of the cheerleader ecosystem that makes a living by eating the crumbs left by the giants.

How can this be the case for software?

Node.js is 'free' software. Anyone can use it without paying a dime. With the proper amount of PR, many people will use it – just because it occupies a place in the minds of people who have been conditioned to blindly believe what is written by the "trusted sources". And, as Node.js is remarkably inefficient, its users will have to:

- buy consulting and books in the hope of understanding how to use the pig properly

- invest time, money and energy in sterile directions, diverting them from what works

- buy more hardware and electricity when they face the dire reality: pigs don't fly.

That is, for as long as people are still able to run their own infrastructure, because we will soon be obliged to "embrace the Cloud" when electricity bills rise while incomes fall.

That may help explain why Microsoft, HP and DELL are so enthusiastic about Node.js: the so-called "Next Big Thing" requires more software licenses, Cloud services, hardware, consulting and maintenance than normally needed.

So, what makes bad execution so desirable for 'the markets' is the fact that there is no better cash-cow than widely distributed inefficiency, preferably endorsed by "reputable sources" to convince the public that deceptive products are worth buying: look, those respectable "independent experts" (paid by us, but you will know it too late, if ever) gave it a triple-A.

In contrast, efficient technology is a threat to the easy gains loved by the markets, because disruptive innovations will easily kill bloated and inefficient product lines – that is, if they are permitted to venture into the so-called "free and open markets" locked up by the cartel members and their armies of servants.

Think about the electric car: zero-maintenance, no planned obsolescence, no tow trucks, no replacement parts, no transmission, no gearbox, no hose, no clutch... no Oil – "The Black Gold" as it is called (most probably for a reason).

Clever users will even be able to generate their own electricity! Where is the only possible lock-in factor? Batteries – hence the war for establishing (proprietary) "standards" for batteries and charging stations before electric cars are widely deployed.

But electric cars are now coming to the front lines because the supply of Oil will be problematic in the near future (like the availability of Nuclear fuel, by the way).

Despite being more reliable, faster and cleaner than Oil cars, Electric cars were killed a century ago for this sole reason: the (very sad, for corporations) lack of any way to lock in end users. In contrast, the opportunities offered by Oil (a rare fossil resource which can be confiscated in a limited number of places on Earth) are a monopolist's dream (come true).

The same (illegal) cartel agreements are seen everywhere in other industries like infrastructure, transportation, telecoms, building, food, consumer goods, waste-recycling, pharma, energy and finance (to cite only a few).

The largest companies are at war. Their holy mission is to eradicate anything that threatens the (juicy) business model created by a clever mix of chaos and inefficiency. People, for example, are more profitable when they are sick. So let's make them eat junk food, drink and breathe poison, and take toxic meds that keep them sick – and therefore profitable – generation after generation.

Like all wars, this one has collateral damage: the pollution, the over-consumption, the corruption of the political elites, of the government institutions, of the educational system, of the media – all of that comes at a price – and the bill is discreetly passed on to the taxpayer (who may not have to wait too long now to feel the bite in his daily life).

The only allowed "innovation" is in the area of turning consumers into ever more efficient cash-cows. Again and again. Preferably for life, through rents (insurance, telecoms, water supply, energy, "research", etc.) that offer inescapable recurring billing.

For example, by planning the haves and the have nots (water supply or droughts, harvests or famine, and on-demand weather disasters), geoengineering can reshape the geopolitical landscape and concentrate wealth even further, letting the well-connected 'play God' in the catastrophe reinsurance and weather derivative markets (the latter was created by... Enron).

Every single time, the method behind their 'success' is the same: create a new inescapable problem and provide the lifetime 'cure' to save people – at a cost – with the intent of detaching people from how systems work in reality:

| Problem artificially created | Planned solution for captive users |
|---|---|
| Pointless Complexity in Software Products | Fees for Consulting, Training, Books, etc. |
| Pointless Bloat and Inefficiency in Software Products | Sell many more machines than needed to do the job |
| Multicore CPUs imposing parallel Programming Skills | Lifetime subscriptions to the Cloud for everyone |

Quoting a Turing Award and ACM Award recipient:

"What I find truly baffling are manuals – hundreds of pages long – that accompany software applications, programming languages, and operating systems. Unmistakably, they signal both a contorted design that lacks clear concepts and an intent to hook customers.

A programmer's competence should be judged by the ability to find simple solutions, certainly not by productivity measured in 'number of lines ejected per day'. Prolific programmers contribute to certain disaster.

Reducing complexity and size must be the goal in every step – in system specifications, design, and in detailed programming.

Every single project was primarily a learning experiment. One learns best by inventing. Only by actually doing a development project can I gain enough familiarity with the intrinsic difficulties and enough confidence that the inherent details can be mastered.

We have demonstrated that flexible and powerful systems can be built with substantially fewer resources in less time than usual. The plague of software explosion is not a 'law of nature'. It is avoidable, and it is the software engineer's task to curtail it."

In the I.T. industry, software can be (and is) pirated. But hardware can't. And this is why 'free' software (products that would be pirated if they were not free) as well as commercial software (which is pirated) have been turned into a way to sell more hardware than needed to unsuspecting consumers lulled by the sweet jingles of the media and advertising industries.

The next step is the Cloud, where people will have no choice but to pay for life because, lacking decent servers, the public will be unable to "harness the parallel processing power of the ever increasing number of cores available on each chip", a challenge depicted by the European Union as "the single most important problem facing the global IT industry".

Welcome to the Matrix

Such reasoning is invariably ridiculed as "conspiracy theory". Yet, as history has shown, this is neither a "theory" nor a "conspiracy": just have a look at the proceedings of the U.S. DoJ vs. Philip Morris, AT&T, Associated Press, Glaxo, or Standard Oil.

You are not "paranoid" when you refer to existing threats that have been well documented over centuries on all continents.

And I am leaving the I.T. industry out of that list only to make it clear that establishing illegal cartels is quite a widespread practice.

As eliminating all true competition lets all (cheating) players raise their revenues by (secretly) agreeing on higher prices, there's no need to build an evil plan against mankind: individual greed, the desire for recognition (or the fear of being excluded from the game for some, and of being fired for many) is a strong enough motive for most of us to follow the master plan.

This explains why mediocrity is (naturally) rewarded by an ecosystem that benefits from inefficiency, and why efficiency is (naturally) condemned: it threatens the supply of precious, artificially created or unresolved problems that create the chaos and pain that drive the sales of "the (lifetime) cure".

This inversion of values is at the core of the problem.

Innovators are often denigrated as "insane" by scaremongering (see F.U.D.). They may indeed be "dangerous", but only for those competitors who pretend to deliver real technical value. There is certainly no "danger" for end users in finally having access to the real thing instead of the illusions published everywhere (note how scarce dissenting voices are).

If that sounds like fiction, well, maybe this is because it is precisely the Great Art of Altering the Perception of the masses, something that the I.T. industry once called "Evangelism", "Promotion", or "Marketing" in its imperfect infancy:

"The Matrix is everywhere. It is all around us. You can see it when you look out your window or when you turn on your television. You can feel it when you pay your taxes.

It is the world that has been pulled over your eyes to blind you from the truth. That you are a slave, Neo. Like everyone else you were born into bondage. Born into a prison that you cannot smell or taste or touch. A prison for your mind.

The Matrix is a system, Neo. That system is our enemy. People are still a part of that system, and that makes them our enemy. You have to understand, most of these people are not ready to be unplugged. And many of them are so inured, so hopelessly dependent on the system, that they will fight to protect it."

The Matrix did not come from nowhere: it has been engineered. And it is sustained by scientifically spread illusions, by the public being castrated in high schools, disinformed and hypnotized by the media, and by how many among us are dependent on the crumbs generously left to feed the slaves.

Here is its weakness: as power is now accelerating its concentration, fewer and fewer servants will be needed. Feeding dead fat is not profitable, so they are fired. And past the initial trauma, they will try to find a way out of the system that rejected them by building value elsewhere.

And if you wonder how much potential there is in such an exercise, just look at history again: each economic crisis revealed new champions able to disrupt entrenched but lazy incumbents that profited from end users and were then no longer able to keep acting in total contempt of reality.

History repeats itself. Empires fall at the peak of their power because they have lost the ability to be relevant the way G-WAN is: by helping people do (much) more work with (much) fewer resources.

Complexity kills. It sucks the life out of developers, it makes products difficult to plan, build and test, it introduces security challenges, and it causes end-user and administrator frustration.

If you think good architecture is expensive, try bad architecture.

How did we reach that sorry state of things?

Big companies are notoriously bad at providing customer service. Not only because they are big and communication within the company is more difficult, but also (and mainly) because, at the top of things, the only question considered as relevant is profitability.

Profitability is not bad in itself, but it is a poor adviser as the dominant planning tool:

Would you feed your kids according to profitability? Probably not. But many people will feed your kids with this sole idea in mind.

And the line is thin between that and considering your kids as pigs (for the record, pigs are often fed with industrial waste which is so toxic that one must pay to recycle it). Whether you like the idea or not, your kids are likely to eat those toxic pigs routinely. Other livestock and farmed fish are no luckier.

So, finally, your kids eat toxic industrial waste because someone looking at the financial side of things decided that this is a clever way to make more money (industrial fat makes pigs grow faster, and they are given drugs to stay alive until they reach the right size).

IT is a less extreme case, but the logic is the same: why pay for top-notch support engineers in your country when you can hire foreigners who will take the calls for 10% of the cost? After all, why should quality of service matter? The customers getting support have already paid – and so goes the brain-damaged logic behind the sloppy strategic choices.

The same goes for any other activity, like product development or justice proceedings. If you can use lobbying, why stay honest when you can be dishonest and stay profitable – even in the very unlikely case where you have to face a fair trial?

What we are all facing is a morality crisis: a damaging policy dictated by the top and imposed on the lower floors. And while it is clear that this will end in tears, the upper floors fight to keep doing business as usual because, in the short term, they benefit from the system.

Lord, Make Me Pure But Not Yet

Don't they have kids? Or can't they love their own kids more than money? To what extent does such behavior fall into the area of dangerous anti-social pathology? If out-of-control people are punished for driving too fast, why not for poisoning kids? Profitability should not be an excuse for a license to do anything.

These are the kinds of questions we will have to deal with in the future, when the system is so broken that it cannot go any further without a bit of relevance to sustain it.