Theory vs. Practice

Diagnosis is not the end, but the beginning of practice.

Were Knuth, Thompson, Ritchie and Kernighan so "Wrong"?

You might have seen the rants of Poul-Henning Kamp (PHK) about recipients of the Association for Computing Machinery (ACM) Turing Award:

- "You're Doing It Wrong", says PHK to Donald Knuth
- "The Most Expensive One-byte Mistake", says PHK to Dennis Ritchie
- "The Software Industry IS the Problem", says PHK to Kenneth Thompson

Published in ACM Queue and relayed by Slashdot, Reddit, Wikipedia and hundreds of blogs, PHK's papers draw very little criticism (if any). Does this mean that PHK is right to write that Knuth, whose books are widely recognized as the definitive description of classical computer science, as well as the authors of C and Unix, have simply "done it wrong" all their lives?

Does it mean that PHK knows so much better than the very best, and that Computer Science is about to be revolutionized by a new genius?

Educating the Masses

PHK explains that, after he was approached, he created Varnish, a thing he calls a "Web server accelerator", to educate his unfortunate elders (and the rest of us):

"I have spent many years working on the FreeBSD kernel, and only rarely did I venture into userland programming, but when I had occasion to do so, I invariably found that people programmed like it was still 1975. So when I was approached about the Varnish project I wasn't really interested until I realized that this would be a good opportunity to try to put some of all my knowledge of how hardware and kernels work to good use." PHK, "Notes from the Architect"

At last, the world had something tangible to show how things can be "done right". The "only rarely did I venture into userland programming" remark probably explains why, unjustly, his feats had remained unattributed (and, thank God, Varnish filled this gap).

Unlike others, who make tests easy to reproduce by disclosing the client, server, OS and hardware specifications, Varnish fights hard to prevent people from making comparisons (resorting to censorship when everything else failed). Could PHK really be that modest?

Since the Varnish "Web server accelerator" did not make comparative benchmarks publicly available, Nicolas Bonvin, an academic expert at EPFL's Distributed Information Systems Laboratory, asked the Varnish team for help with such a test.

And, sadly, Varnish, the "Web server accelerator", was slower than Nginx – the Web server supposed to be "accelerated".

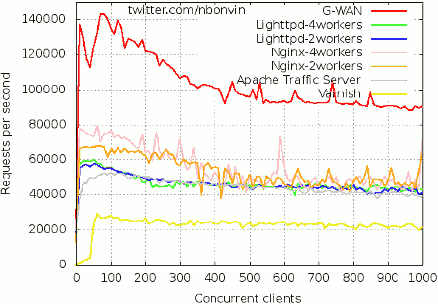

Nicolas' test was done with ApacheBench (a single-threaded client) to compare five servers (see the left chart above) on a modest Core i3 laptop.

While an apoplectic PHK kept looping on while(1) "you are doing it wrong";, Nicolas applied, one after another, the many options the Varnish team told him to use, with the same calamitous results.

After this, a Varnish employee in total denial posted F.U.D. on dozens of blogs to explain how badly designed this benchmark was. The fact that Varnish was involved in this series of tests, and that Varnish refused to design and publish another test, removes any remaining doubt about the merits of those criticisms.

Industry standards

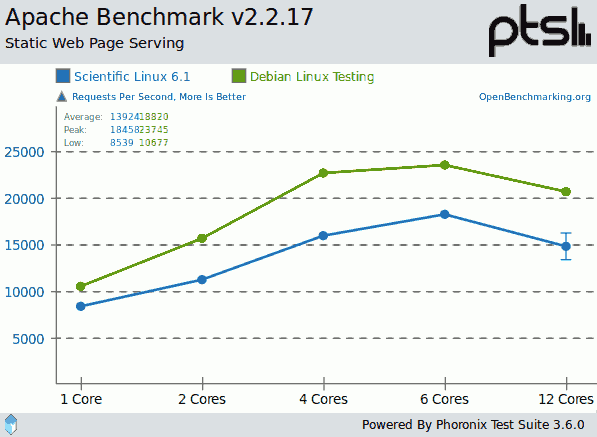

This same test, despised by Varnish, is the one used by the Phoronix Test Suite as the industry's official reference for the Linux, OpenSolaris, Apple Mac OS X, Microsoft Windows and BSD operating systems (see below for the official IBM Apache test made by Phoronix on Linux):

"This is a test of AB, which is the Apache benchmark program. This test profile measures how many requests per second a given system can sustain when carrying out 700,000 requests with 100 requests being carried out concurrently."

The ab.c test used by Nicolas Bonvin was significantly more thorough: 1,000,000 ab requests (instead of 700,000) over a concurrency range of 1 to 1,000 (instead of a single concurrency level of 100).
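A concurrency sweep of this kind can be scripted around ab. The sketch below is a minimal Python illustration, not the ab.c program Nicolas Bonvin used: the URL, the file name 100.html, the step of 10 between concurrency levels and the use of HTTP keep-alive (`-k`) are all assumptions. It relies only on ab's `-n` (total requests), `-c` (concurrency) and `-k` options and on the "Requests per second" line of ab's report.

```python
from __future__ import annotations

import re
import shlex
import subprocess

# ab prints a summary line such as: "Requests per second:    1234.56 [#/sec] (mean)"
_RPS_RE = re.compile(r"^Requests per second:\s+([\d.]+)", re.MULTILINE)


def concurrency_levels(c_max: int = 1_000, step: int = 10) -> list[int]:
    """Concurrency levels 1, step, 2*step, ..., up to c_max (no duplicates)."""
    if c_max < 1 or step < 1:
        raise ValueError("c_max and step must be >= 1")
    return sorted({1, *range(step, c_max + 1, step)})


def ab_sweep(url: str, requests: int = 1_000_000,
             c_max: int = 1_000, step: int = 10) -> list[list[str]]:
    """One ab command line per concurrency level (keep-alive is an assumption)."""
    return [["ab", "-n", str(requests), "-c", str(c), "-k", url]
            for c in concurrency_levels(c_max, step)]


def parse_rps(ab_output: str) -> float:
    """Extract the mean requests per second from ab's report."""
    match = _RPS_RE.search(ab_output)
    if match is None:
        raise ValueError("no 'Requests per second' line in ab output")
    return float(match.group(1))


def run_sweep(url: str, **kwargs) -> dict[int, float]:
    """Run each ab command in turn; map concurrency level -> requests/second."""
    results: dict[int, float] = {}
    for cmd in ab_sweep(url, **kwargs):
        done = subprocess.run(cmd, capture_output=True, text=True, check=True)
        results[int(cmd[4])] = parse_rps(done.stdout)
    return results


if __name__ == "__main__":
    # Only print the commands; call run_sweep() on a test machine to execute them.
    for cmd in ab_sweep("http://127.0.0.1:8080/100.html", step=100):
        print(shlex.join(cmd))
```

Running every level with the same request count keeps the levels comparable; how the original ab.c aggregated its per-level results is not stated in this article.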

So, the furious (and clueless) Varnish blog post was more motivated by the poor results of its server than by the procedure followed by Nicolas.

A textbook case of Fear, Uncertainty and Doubt.

Some (others?) claimed that a faster server is "not needed". Why, then, make Varnish, a product sold as an "acceleration server"?

Web applications (CMS, AJAX, Comet, SaaS, Clouds, Grids, Trading, Auctions, online Games) live and die by latency (so serving many more requests per second is a plus). And a Web server able to do the same job on far fewer machines reduces costs.

Since some vendors seem to take ample liberties with the most basic hard facts, judging whether or not efficiency is a desirable feature is best left to end users.

Vertical Scalability

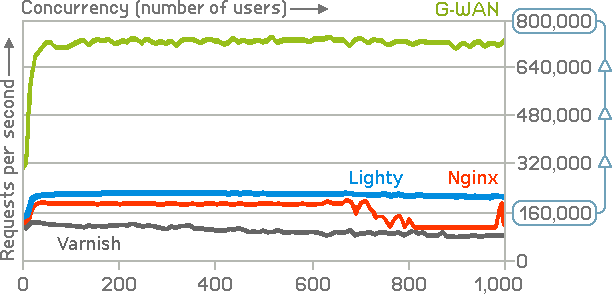

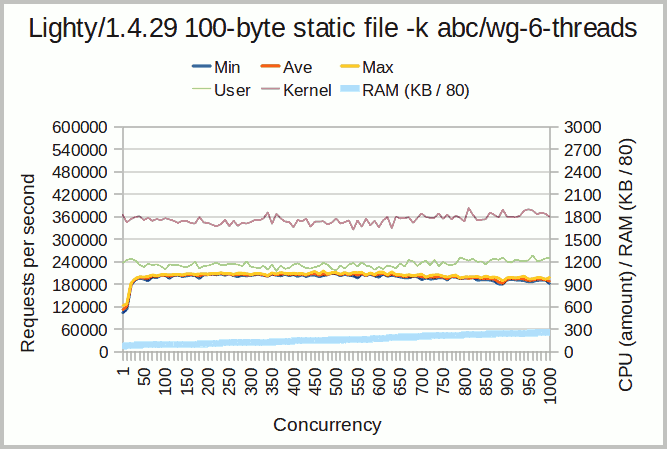

In Nicolas' test, Nginx and Lighty perform similarly with 2 or 4 workers. More recent tests using weighttp, a multi-threaded client, instead of the single-threaded ApacheBench (see below), have confirmed that Nginx and Lighty do not scale on multi-core systems, the mainstream CPU architecture since 2004.

Despite this, the Nginx and Lighty Web servers are twice as fast as Varnish, the self-proclaimed "Web server accelerator".

That is the program PHK claims to have designed with "all my knowledge of how hardware and kernels work", before adding: "Welcome to Varnish, a 2006 architecture program".

It is not clear whether PHK has ever heard of multi-core CPUs, or whether he just does not understand their purpose, but one thing is certain: PHK knows very little about performance coding.

Finally, Ken Thompson, Dennis Ritchie and Brian Kernighan were not the total idiots depicted by PHK: PHK's difficulties with C and Unix seem to be due to his own (now crystal-clear) limitations rather than to someone else's fault.

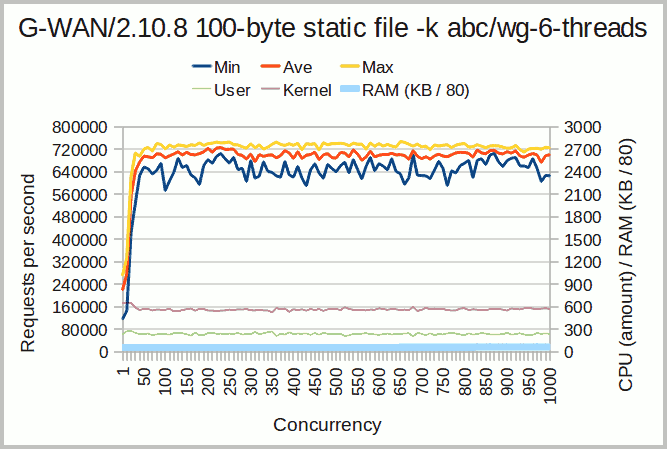

More recent versions of those servers confirm Nicolas' test, with an even wider gap between Varnish and G-WAN (thanks to a 6-Core CPU and the use of weighttp, a multi-thread client able to fully address the potential of SMP servers):

Along similar lines, Igor Sysoev, Nginx's author, claimed that Nginx looked slower than G-WAN only because of Nginx's handlers.

Like PHK, Igor declined to run a test to support his argument, so Nginx was recompiled without handlers and re-tested.

And, without handlers, Nginx was only 2-5% faster than with its default handlers. G-WAN supports more features than Nginx, yet it has never been stripped down for any test.

Contrary to the recurring accusations posted all around, Nicolas Bonvin has never had any connection with G-WAN (before, during or after the tests). We first heard of him when he asked for a Varnish comparative test, and again later when he published several series of results. Varnish's Ministry of Propaganda was quick to react, in its usual subtle and constructive way.

Each time G-WAN is mentioned as an alternative to Varnish or Nginx, some voices hijack the discussion by commenting on the personality of G-WAN's author (a subject they claim to master despite being total strangers to him).

When this takes on spiraling proportions, always groundless and only meant to urge people to stay away from someone's work, one should be concerned: this is an obvious fear campaign conducted by a competitor who feels weak in the technical area.

Whether or not these clueless rants can be called "science" or "technology" is left as an exercise for the reader. But too much ambition with too little capability, coupled with a natural inclination to deny reality, falls a bit short of earning a place in the Pantheon of legendary coders. In contrast, tangible results need little explanation: they speak for themselves.

Performance, Scalability and RAM/CPU Usage

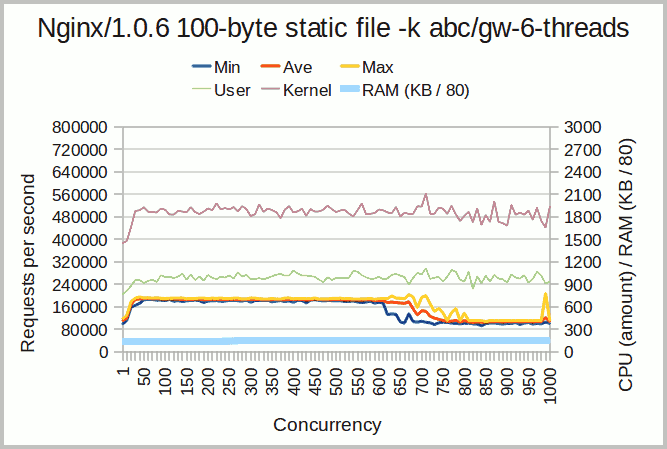

The breakdown of performance, CPU usage and memory usage for Nginx, Lighttpd, Varnish and G-WAN is given below:

Note that Nginx is twice as fast as Varnish:

Nginx Chart Interpretation

Nginx has high but stable kernel and user-mode CPU usage, as well as slowly growing memory usage.

This constant CPU overhead prevents Nginx from scaling vertically as concurrency grows.

This CPU usage stems from both Nginx's architecture and its implementation, which are clearly sub-optimal (hence the final peak near 1,000 concurrent users, at the end of the test).

Note that Lighty is cleaner and faster than Nginx and Varnish:

Lighty Chart Interpretation

Lighty has CPU levels similar to Nginx's, with a bit more overhead in user mode, perhaps related to its higher memory usage as concurrency grows.

But Lighty sustains more stable vertical scalability than Nginx at high concurrency and achieves slightly better performance across the whole concurrency range.

As with Nginx, the user-mode CPU consumption weighs on the kernel, which has a hard time reaching its potential because the server's user-mode code monopolizes too many critical resources.

Note Varnish's asthmatic speed and gargantuan appetite for RAM and CPU:

Varnish Chart Interpretation

Varnish's memory usage grows wildly (the memory usage shown here is not the reserved virtual memory but the Resident Set Size (RSS), i.e. the memory actually in use).
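On Linux, the difference between reserved virtual memory and RSS can be checked for any process in /proc/<pid>/status, where the kernel reports VmSize (virtual address space) and VmRSS (resident set) in kB. The minimal Python sketch below assumes a Linux /proc filesystem; it is an illustration of the two figures, not the tool used to draw the charts.

```python
from __future__ import annotations

import os


def memory_kib(status_text: str) -> dict[str, int]:
    """Extract VmSize (virtual) and VmRSS (resident) from /proc/<pid>/status text.

    The kernel prints these lines as e.g. "VmRSS:    229232 kB" (units of 1024 bytes).
    """
    values: dict[str, int] = {}
    for line in status_text.splitlines():
        key, _, rest = line.partition(":")
        if key in ("VmSize", "VmRSS"):
            values[key] = int(rest.split()[0])
    return values


def process_memory_kib(pid: int) -> dict[str, int]:
    """Read the memory figures of a running user-space process (Linux only)."""
    with open(f"/proc/{pid}/status", encoding="utf-8", errors="replace") as f:
        return memory_kib(f.read())


if __name__ == "__main__":
    if os.path.exists("/proc/self/status"):
        mem = process_memory_kib(os.getpid())
        print(f"virtual: {mem['VmSize']} kB, resident (RSS): {mem['VmRSS']} kB")
```

VmSize can be far larger than VmRSS without costing physical RAM; only the resident pages actually occupy memory.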

Varnish is also the only server whose user-mode CPU overhead exceeds its kernel-mode CPU overhead.

This unbalanced CPU usage reveals a disastrous implementation, reflected in the poorest performance of all the servers tested here.
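The user-mode versus kernel-mode split discussed in these charts can be sampled on Linux from /proc/<pid>/stat, whose fields 14 (utime) and 15 (stime) give the CPU time a process has spent in user mode and in kernel mode, in clock ticks. The minimal Python sketch below assumes Linux and reads a single sample; a real measurement would take the difference between two samples over the test interval.

```python
from __future__ import annotations

import os


def cpu_ticks(stat_text: str) -> tuple[int, int]:
    """Return (utime, stime) in clock ticks from one /proc/<pid>/stat line.

    Field 2 (the command name) is wrapped in parentheses and may contain
    spaces, so the remaining fields are split after the last ')'.
    """
    fields = stat_text.rsplit(")", 1)[1].split()
    # fields[0] is field 3 (state); utime is field 14, stime is field 15.
    return int(fields[11]), int(fields[12])


def user_share(stat_text: str) -> float:
    """Fraction of the process' CPU time spent in user mode (0.0 if none yet)."""
    utime, stime = cpu_ticks(stat_text)
    total = utime + stime
    return utime / total if total else 0.0


if __name__ == "__main__":
    if os.path.exists("/proc/self/stat"):
        with open("/proc/self/stat", encoding="utf-8", errors="replace") as f:
            line = f.read()
        ticks_per_second = os.sysconf("SC_CLK_TCK")
        utime, stime = cpu_ticks(line)
        print(f"user: {utime / ticks_per_second:.2f} s, "
              f"kernel: {stime / ticks_per_second:.2f} s, "
              f"user share: {user_share(line):.0%}")
```

A user share above 50% corresponds to the situation described for Varnish, where user-mode CPU time exceeds kernel-mode CPU time.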

Note that G-WAN is 5-10x faster than Nginx, Lighttpd and Varnish while using (far) fewer CPU and RAM resources:

G-WAN Chart Interpretation

G-WAN's scalability follows a horizontal Requests per Second (RPS) slope, correlated with very low and constant user-mode and kernel CPU usage.

G-WAN also scales and performs 5-10x better than the other servers tested here.

G-WAN's memory usage is also the lowest, despite G-WAN processing up to ten times more requests per second than the other servers.

The variability of the performance curves is due to the kernel being under more pressure to deliver much lower latency.

G-WAN v2.10.8 served 3 to 7x more requests per second than the other servers and completed the test in 3 to 6x less time:

| Server  | Avg RPS | RAM       | CPU       | Time for test             |
|---------|---------|-----------|-----------|---------------------------|
| Nginx   | 167,977 | 11.93 MB  | 2,910,713 | 01:53:43 = 6,823 seconds  |
| Lighty  | 218,974 | 20.12 MB  | 2,772,122 | 01:09:08 = 4,748 seconds  |
| Varnish | 103,996 | 223.86 MB | 4,242,802 | 03:00:17 = 10,817 seconds |
| G-WAN   | 729,307 | 5.03 MB   | 911,338   | 00:28:40 = 1,720 seconds  |

And despite being the fastest, G-WAN used 3 to 5x less CPU and 2 to 45x less RAM (note for Varnish: what is measured here is the memory actually allocated, not the reserved virtual address space, which does not deprive the system of any hardware resource).
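The ratios between G-WAN and the other servers can be recomputed directly from the table. The short Python sketch below copies the four rows as printed (the unit of the CPU column is not stated, so only ratios are meaningful) and reports, for each metric, the smallest and largest factor between G-WAN and the three other servers.

```python
from __future__ import annotations

# Rows copied from the table: (average RPS, RAM in MB, CPU as reported, test time in seconds).
RESULTS: dict[str, tuple[float, float, float, float]] = {
    "Nginx":   (167_977, 11.93, 2_910_713, 6_823),
    "Lighty":  (218_974, 20.12, 2_772_122, 4_748),
    "Varnish": (103_996, 223.86, 4_242_802, 10_817),
    "G-WAN":   (729_307, 5.03, 911_338, 1_720),
}


def ratios_vs(reference: str = "G-WAN") -> dict[str, dict[str, float]]:
    """For every other server, express each metric as a multiple of the reference.

    'rps' > 1 means the reference served more requests per second;
    'ram', 'cpu' and 'time' > 1 mean the other server used more of that resource.
    """
    ref_rps, ref_ram, ref_cpu, ref_sec = RESULTS[reference]
    out: dict[str, dict[str, float]] = {}
    for name, (rps, ram, cpu, sec) in RESULTS.items():
        if name == reference:
            continue
        out[name] = {
            "rps": ref_rps / rps,
            "ram": ram / ref_ram,
            "cpu": cpu / ref_cpu,
            "time": sec / ref_sec,
        }
    return out


def ratio_range(metric: str, reference: str = "G-WAN") -> tuple[float, float]:
    """Smallest and largest factor for one metric across the other servers."""
    values = [r[metric] for r in ratios_vs(reference).values()]
    return min(values), max(values)


if __name__ == "__main__":
    for metric in ("rps", "time", "cpu", "ram"):
        low, high = ratio_range(metric)
        print(f"{metric:>4}: {low:.2f}x to {high:.2f}x")
```

With the figures in the table, this gives roughly 3.3x to 7.0x for requests per second, 2.8x to 6.3x for test duration, 3.0x to 4.7x for CPU and 2.4x to 44.5x for RAM.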

When merely used as a static-content Web server, G-WAN (an application server) is 9 to 42x faster and uses much less CPU and RAM than all the other servers ("Web server accelerators" like Varnish, or mere Web servers like Nginx).

Guess how much wider the gap will be with 64-core CPUs.

The same goes when G-WAN is used as a caching reverse proxy, the first one based on a 'wait-free' key-value store. On top of being faster, G-WAN is also immensely easier to use: it works its marvels without any configuration file (no risk of errors, no learning curve).

But G-WAN is also an application server which supports Java, C, C++, D and Objective-C scripts, with a rich API and the ability to use any third-party library through a simple #pragma link directive. That is a bit more difficult than merely serving static content.

Further, G-WAN (edit & play) scripts are much faster (and easier to write) than Nginx (statically compiled and linked) modules.

Fair Play

By contrast with those sterile games, Lighttpd's main contributor (Lighttpd being one of the servers tested alongside Varnish, Nginx and G-WAN) found no reason to raise an objection. He simply said that he would be very curious to have a look at G-WAN's source code:

G-WAN has this very nice key value storage feature using wait-free algorithms. I would LOVE to see that implementation, this is top-notch stuff. Very few people are capable of correctly coding such a thing.

Conclusion

If you have something to lose by using poorly performing solutions, then do test before you buy. That will cost you less than trusting people who have to bash others to promote themselves, without ever providing grounds for their claims:

Tricks and treachery are the practice of fools, that don't have brains enough to be honest.