Theory vs. Practice

Diagnosis is not the end, but the beginning of practice.

How to Choose a Multicore CPU in 2025 – and How Much it will Matter

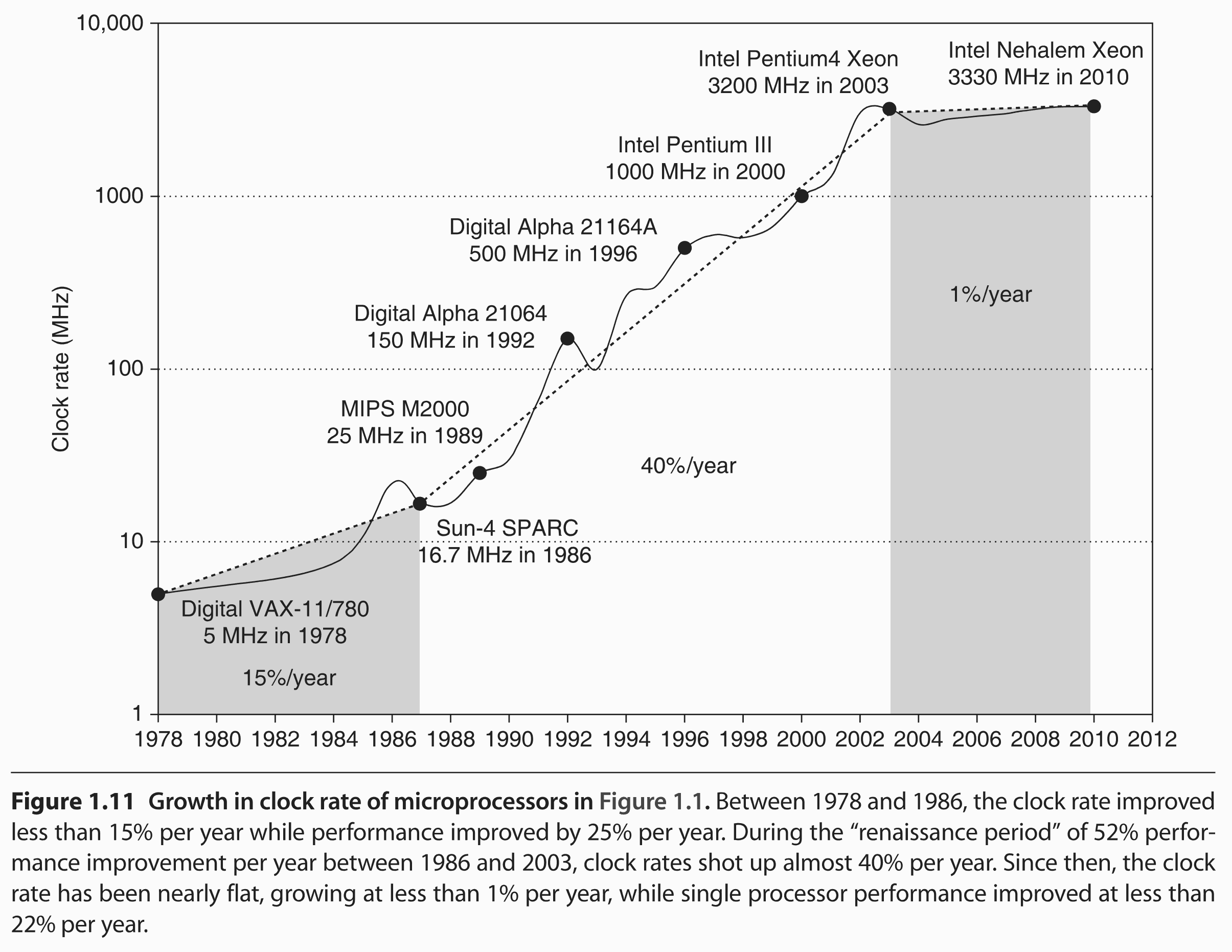

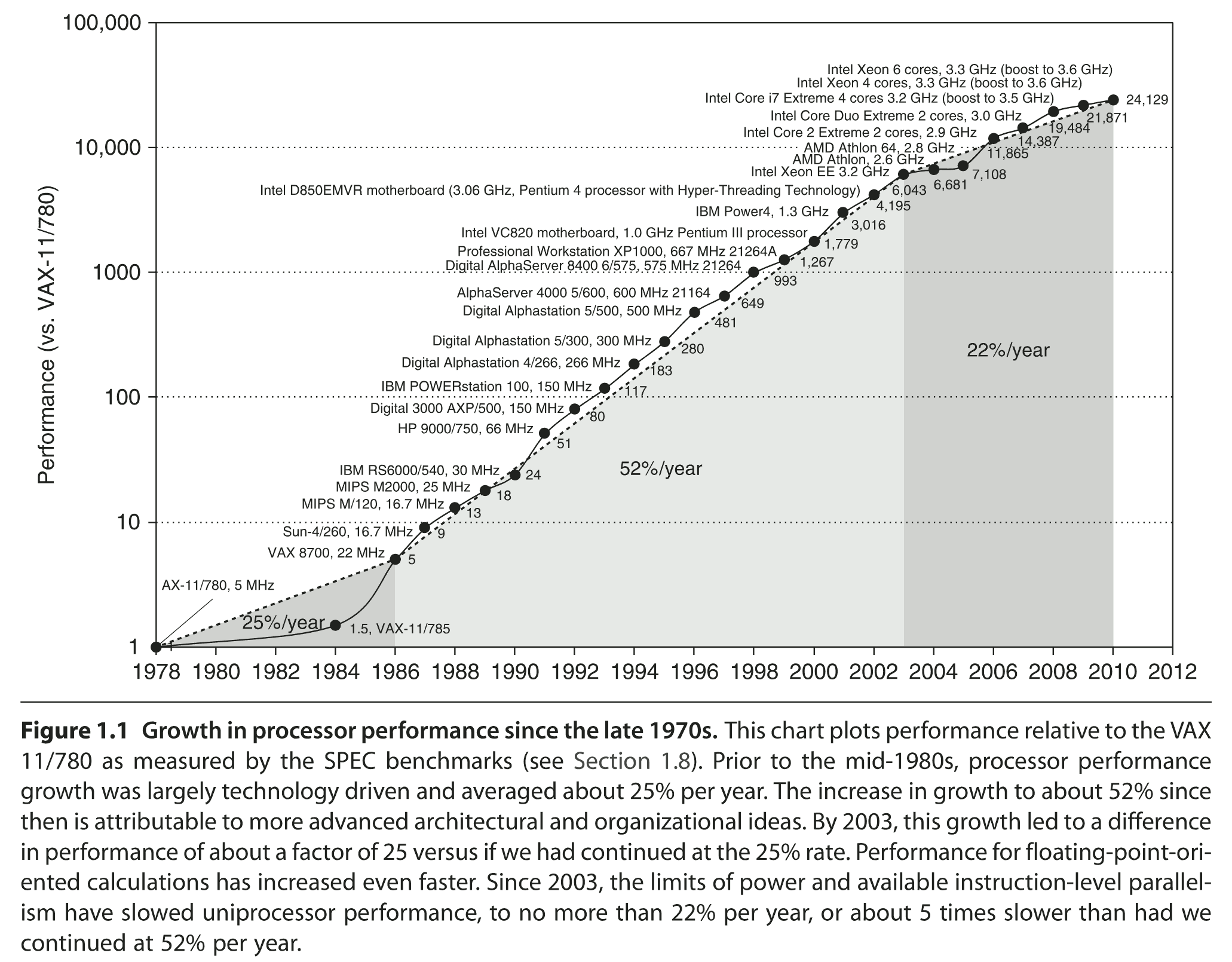

When I was young, CPUs were single-core, and the CPU frequency was an indicator of its speed. As the frequency was doubling every 2 years, code execution speed was also doubling (sometimes a bit more thanks to new CPU instructions). The faster the CPU frequency, the better – things were simple.

After 2001 (when CPU frequencies stopped climbing), things became more difficult because CPU vendors started to market multicore CPUs under names that were no longer related to their capacity.

Today, larger reference numbers do not necessarily imply better performance: for example, there are Intel i5 or i7 CPUs that are faster than some i9 or Xeon CPUs.

CPU frequency remains a relevant criterion – but since CPU frequencies no longer grow beyond the 5-6 GHz limit, there are other important metrics to consider.

Higher clock speeds imply higher energy consumption, that's true.

But CPU "governors" (powersave vs. performance) and a user-defined variable clock-speed range (from 800 MHz to 5500 MHz for my i9 CPU) let you decide how much your CPU will consume (so a larger range is more desirable).
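On Linux, the governor and the clock-speed range are exposed as plain text files by the kernel's cpufreq subsystem. The following is a minimal sketch for inspecting them, assuming a Linux system where `/sys/devices/system/cpu/cpu0/cpufreq` exists (it may be absent in Virtual Machines or containers). The function name `read_cpufreq` is made up for this illustration.

```python
from pathlib import Path

# Standard Linux cpufreq location for the first CPU (assumption: Linux host).
CPUFREQ = Path("/sys/devices/system/cpu/cpu0/cpufreq")

def read_cpufreq(base=CPUFREQ):
    """Return the governor and frequency limits (in MHz) found under `base`.

    Files that are missing or unreadable are skipped, so the result
    may be an empty dict on systems without cpufreq support.
    """
    names = ("scaling_governor", "scaling_min_freq", "scaling_max_freq",
             "cpuinfo_min_freq", "cpuinfo_max_freq")
    info = {}
    for name in names:
        try:
            text = (Path(base) / name).read_text().strip()
        except OSError:
            continue
        # Frequencies are reported in kHz; the governor is a word.
        info[name] = int(text) // 1000 if text.isdigit() else text
    return info

if __name__ == "__main__":
    print(read_cpufreq())
```

The `scaling_*` values are the user-defined range currently in effect, while the `cpuinfo_*` values are the hardware limits.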

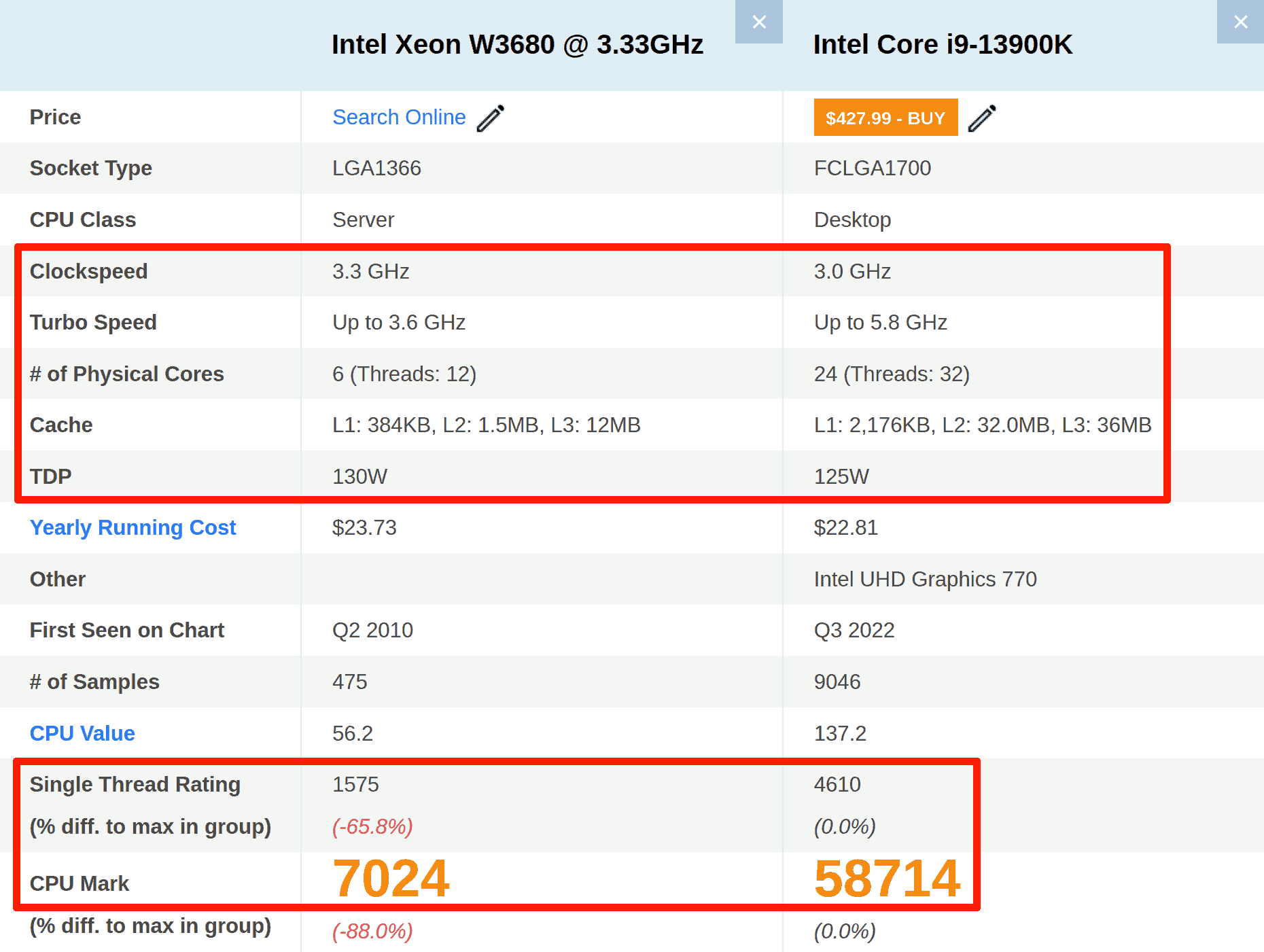

In 2009-2024, G-WAN was benchmarked on the 2008 Intel Xeon 6-Core that tops the charts on the left.

Purchased in 2024, my 2022 Intel Core i9 is 8.36 times faster overall, and its processing speed per Core (or thread) is 2.92 times higher than for the 2008 Xeon.

So the problem that everyone is facing is... how to navigate the hundreds of CPU references to pick the CPU you need?

Is it better to have many Cores – or to have fewer CPU Cores and a higher CPU frequency? Are there other criteria to take into account?

For Desktop machines, this maze is further obscured by the fact that PC vendors may forcibly add a (high-margin) graphics card... that is redundant with your CPU features (my i9 CPU embeds an "Intel Corporation Raptor Lake-S GT1 [UHD Graphics 770]").

Unless you need a dedicated GPU (my i9 runs 3D video games effortlessly without one), a PCI 3D graphics card is pointlessly consuming a lot of power.

We will resolve these questions, explain what the gains are, and show how to reduce your purchase and operating costs... as well as how a server "status" page may help.

Why Bother?

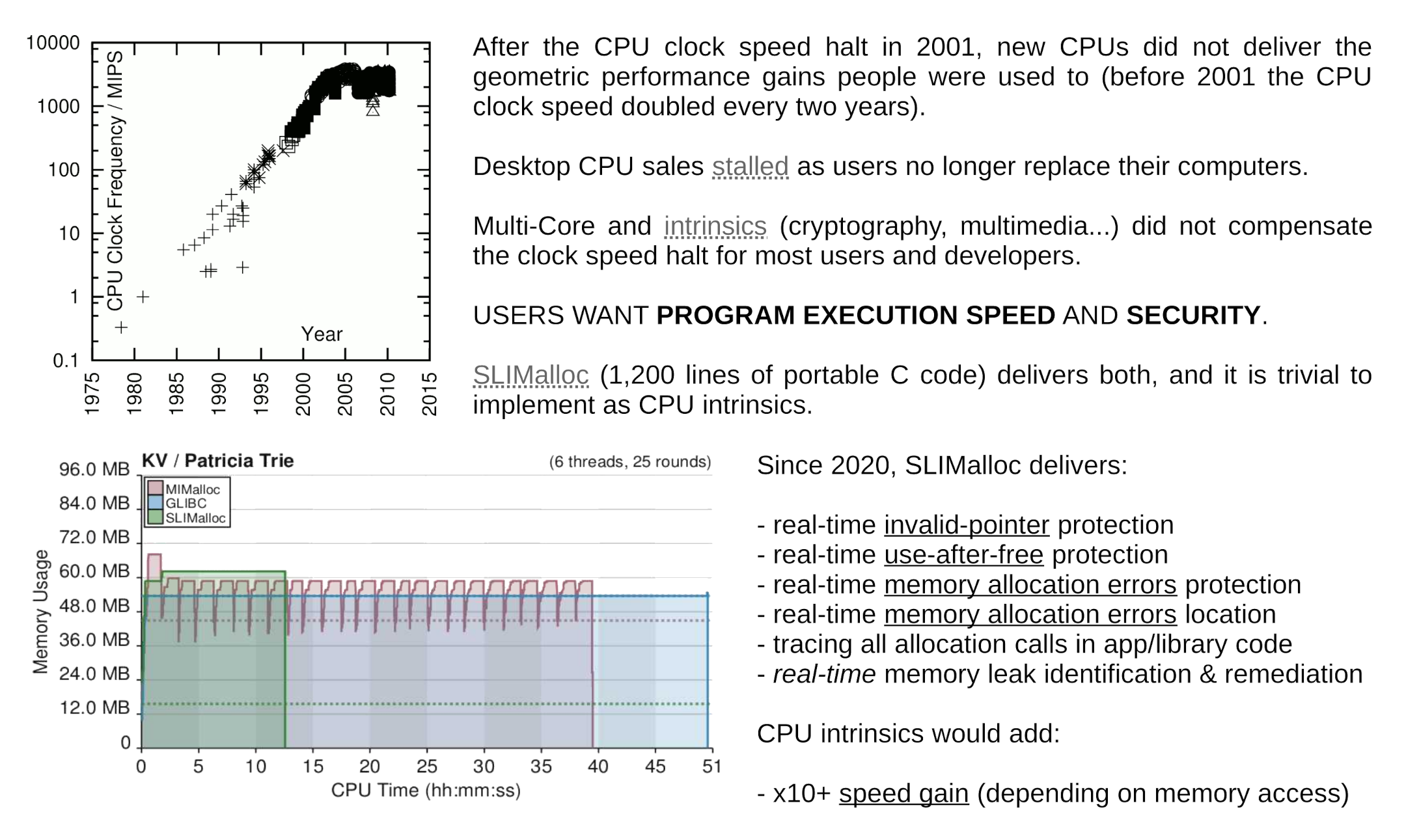

Well, it's all about costs – a fast, many-Core CPU can save you many computers – that is, if your software is able to exploit parallelism. As the chart below illustrates, in 2020, the GLibC memory allocator and Microsoft Research Mimalloc scaled poorly as compared to TWD's SLIMalloc (used by G-WAN v15+):

A popular (yet inefficient) alternative to scalable software is to use Virtual Machines.

Virtual Machines let you split a computer into several 'virtual' computers... but this feature comes at a cost (a 3-5 times slow-down as compared to bare-metal hardware).

If the top speed of a car is 25 mph, a 20 mph street limit will slow this car down by 5 mph.

If the top speed of a car is 250 mph, a 20 mph street limit will slow this car down by 230 mph.

That's why many servers claim that Virtual Machines have a "negligible impact" on performance. This is only true because these servers are slow to begin with. Also, hosted servers using Virtual Machines don't let you set up the CPU and Linux kernel options (aggravating the performance losses).
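The car analogy can be put in numbers. This small sketch only restates the arithmetic of the two examples above; the function name `capped_speed_loss` is invented for the illustration, and the speed cap stands in for the overhead of a Virtual Machine.

```python
def capped_speed_loss(top_speed, cap):
    """Return (absolute loss, fraction lost) when `top_speed` is limited to `cap`."""
    effective = min(top_speed, cap)
    lost = top_speed - effective
    return lost, lost / top_speed

for top in (25, 250):
    lost, fraction = capped_speed_loss(top, cap=20)
    print(f"top speed {top} mph: loses {lost} mph ({fraction:.0%})")
```

The slow car gives up a fifth of its speed, while the fast car gives up more than nine tenths of it: the same cap is "negligible" only for the slow one.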

So, any time you can, select software that scales on multicore systems: it will do the job much faster, on far fewer computers.

Thanks to SLIMalloc, on top of adding memory-safety, G-WAN removed the GLibC bottleneck related to memory-allocation.

And, since G-WAN (1) is self-contained (statically-linked) and (2) does its own irrevocable sandboxing (its code and servlets cannot exit the /gwan folder), it is already isolating itself from any other program running on the same system.

Since G-WAN starts with a 500-700 KB memory footprint (the "RSS", Resident Set Size), you can run many, many G-WAN instances that are secure because they are completely isolated from each other (and from the system).

Under higher load, G-WAN allocates more memory – and it frees it once the traffic goes down. Here is a short excerpt of the G-WAN "status" page for one of the 6 instances that have been running for a week, alongside other network applications, on the same bare-metal server (a 2017, Passmark/8138, 4-Core Intel Xeon E3-1230 v6 @ 3.50GHz CPU), serving static and dynamic contents:

- server uptime .... 00.00.07 04:03:39
- traffic total .... in:54.4 MB, out:7.2 GB
- traffic daily .... in:7.8 MB, out:994.4 MB
- traffic today .... in:3.9 MB, out:512.1 MB
- VM address-space . 3'694'592 bytes (3.5 MB)
- RSS physical RAM . 851'968 bytes (832.0 KB)
- RSS peak ......... 7'200'768 bytes (6.8 MB)
- pages reclaims ... 172'220'416 bytes (164.2 MB)
- RAM free/total ... 91% of 15.2 GB
- Disk free/total .. 99% of 417.8 GB
- request time ..... 52 µs [27-106]

A server lacking such a page is like a car without speed, RPM, oil and fuel gauges: you don't know how long you can keep going (on the accelerator pedal, and on the road)... without breaking things, or seeing the car stop (out of fuel). To evaluate the situation at first glance, you need as much information as available – all on a single page (so that you don't need dozens of clicks and/or commands to get a clue). Parsable information allows cluster/WAN consolidation and statistics. Get server health history by logging the "status" page daily (or more often if needed). It's trivial to do that with G-WAN – without 3rd-party tools.

Above, we see crucial information for server health status and server provisioning:

- VM address-space . is the current Virtual Memory address-space consumed by this G-WAN process
- RSS physical RAM . is the current, real-time total G-WAN process RAM consumption
- RSS peak ......... is the maximum past total G-WAN process RAM consumption
- pages reclaims ... is how much memory G-WAN has allocated and released since it was started

Of course, with such low RAM and CPU consumption, Virtual Machines can be used with G-WAN, but they no longer serve any purpose (they just slow down G-WAN, consume more memory than necessary, and inject more vulnerabilities than already present in the OS).
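Because the "status" excerpt is line-oriented, it lends itself to parsing for logging and consolidation. The sketch below is only an illustration based on the layout of the excerpt shown earlier (a label, dot padding, then a value). It is not a G-WAN API, and the names `parse_status`, `LINE_RE` and `BYTES_RE` are hypothetical.

```python
import re

# A few lines copied from the "status" excerpt shown earlier.
STATUS_SAMPLE = """\
- server uptime .... 00.00.07 04:03:39
- VM address-space . 3'694'592 bytes (3.5 MB)
- RSS physical RAM . 851'968 bytes (832.0 KB)
- RSS peak ......... 7'200'768 bytes (6.8 MB)
- pages reclaims ... 172'220'416 bytes (164.2 MB)
- request time ..... 52 µs [27-106]
"""

# "- <label> <dot padding> <value>": the first run of dots separates both parts.
LINE_RE = re.compile(r"^-\s+(?P<label>.+?)\s*\.+\s+(?P<value>.+?)\s*$")
# Byte counts use an apostrophe as thousands separator, e.g. 851'968 bytes.
BYTES_RE = re.compile(r"^([\d']+) bytes\b")

def parse_status(text):
    """Map each label to an int (for byte counts) or to the raw value string."""
    fields = {}
    for line in text.splitlines():
        match = LINE_RE.match(line)
        if not match:
            continue  # ignore anything that is not a "- label ... value" line
        label, value = match.group("label"), match.group("value")
        as_bytes = BYTES_RE.match(value)
        fields[label] = int(as_bytes.group(1).replace("'", "")) if as_bytes else value
    return fields

if __name__ == "__main__":
    for label, value in parse_status(STATUS_SAMPLE).items():
        print(f"{label}: {value}")
```

Storing one such dictionary per day (or per hour) would be enough to chart the RSS peak over time and to spot a server that needs more resources.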

How to pick the best CPUs? (larger caches, highest frequency, largest number of Cores)

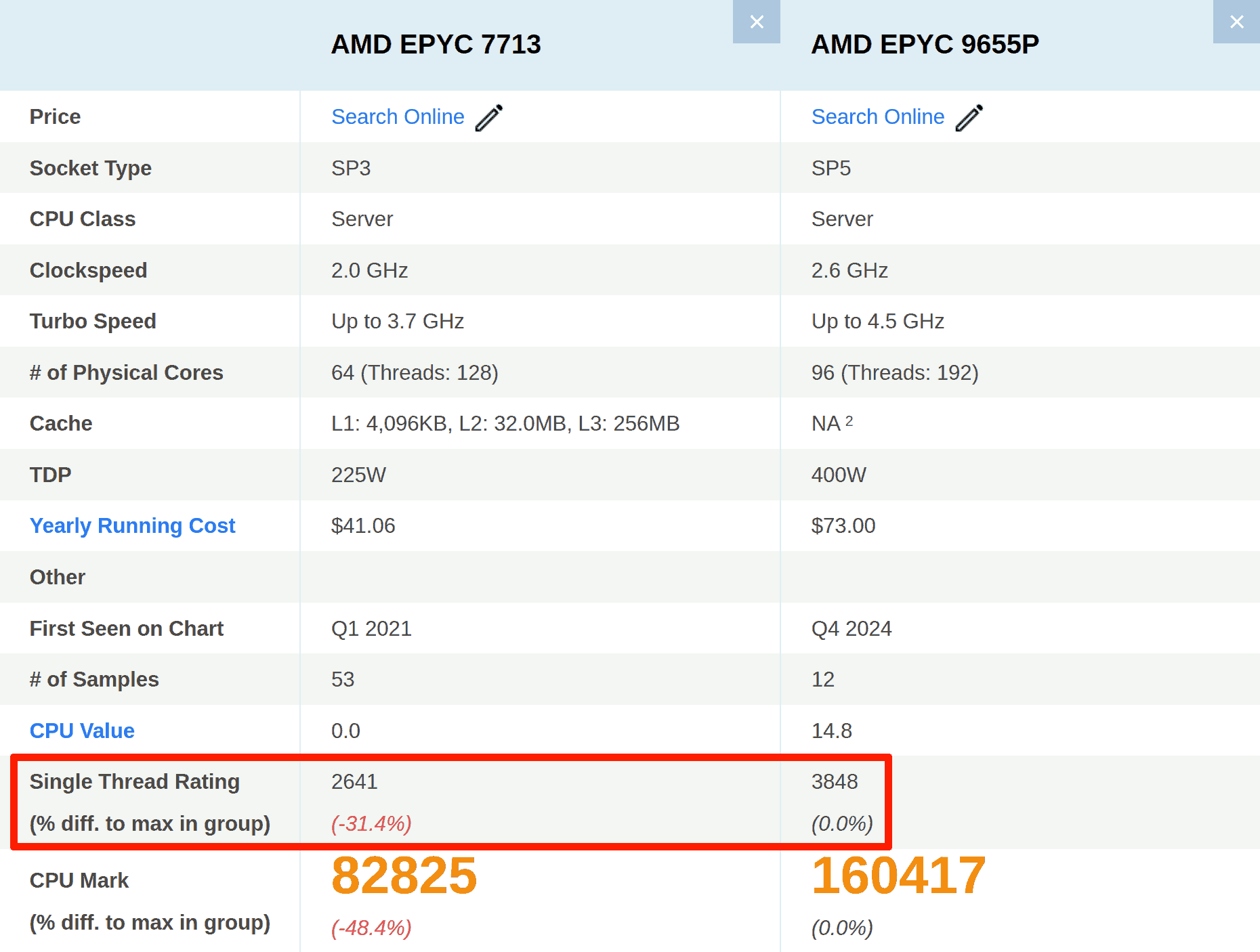

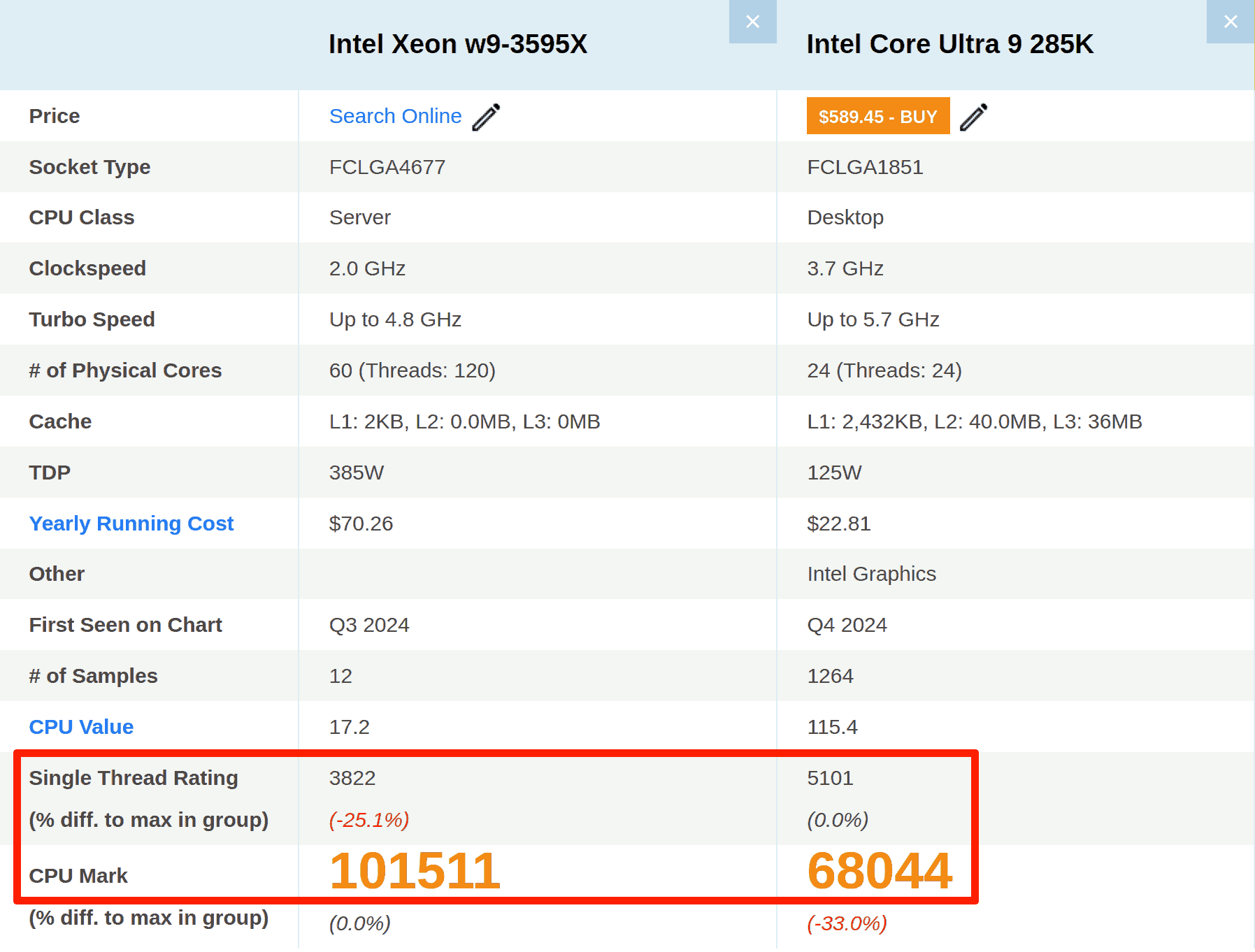

I have used the 'Passmark' CPU benchmark for almost 2 decades because it reliably reflects the real performance of CPUs. There are many lists, for Desktop machines, low-consumption devices, servers – so you don't have to waste hours to find what you are looking for.

For example, here are the CPUs I have used for benchmarks from 2008 to 2024 and the one used since 2024:

Everything (clock speed, number of Cores, cache sizes, energy consumption) has improved... but the most game-changing criteria for me are the single-thread rating (2.92 times better!) and, of course, the overall score (8.36 times better – due to the x4 number of Cores).

Before 2001, a factor four in single-thread performance was reached in 4 years! Here, we had to wait from 2008 to 2022 (14 years!) to almost get a 3x performance boost. This is why, IMHO, you should not miss such an opportunity when it becomes available.
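To compare the two eras on the same scale, both gains can be converted into a "doubling time". This is a back-of-the-envelope sketch that assumes steady exponential growth over each period, which is a simplification. The function name `doubling_time` is made up for the illustration.

```python
import math

def doubling_time(speedup, years):
    """Years needed to double performance, assuming steady exponential growth."""
    return years * math.log(2) / math.log(speedup)

# Factor four in 4 years (the pre-2001 pace quoted above).
print(f"before 2001: {doubling_time(4.0, 4):.1f} years per doubling")
# Single-thread rating 2.92 times better between the 2008 and 2022 CPUs.
print(f"2008 to 2022: {doubling_time(2.92, 2022 - 2008):.1f} years per doubling")
```

Under this assumption, single-thread performance went from doubling every 2 years to doubling roughly every 9 years.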

I repeat: the single-thread rating defines the speed at which your code will run (faster or slower). So, for me, that's the most important metric (the number of Cores matters, but having many SLOW Cores is pointless).

- Here is a list of the most powerful CPUs (some have very high prices).

- This list is for more reasonable prices.

- Today, the top (with Intel CPUs at a reasonable price) is the 14th generation.

You have to consult these lists because the CPU reference names can be close – yet have very different capacities:

Since most software programs do not scale on multicore, it is more rewarding to have a higher Passmark "single-thread" score than more (slower) CPU Cores.

And, with programs that scale on multicore systems (like G-WAN), target the highest number of Cores and the highest Passmark "single-thread" score (this results in the highest Passmark overall score).
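The trade-off between per-Core speed and Core count can be sketched with Amdahl's law, a textbook model that the text above does not name but that captures the same idea: only the parallel part of a program benefits from more Cores. The two CPUs below are hypothetical (they are not real Passmark entries), and `estimated_throughput` is an invented helper that ignores caches, memory bandwidth and turbo frequencies.

```python
def amdahl_speedup(cores, parallel_fraction):
    """Amdahl's law: speedup over one Core when only part of the work is parallel."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)

def estimated_throughput(single_thread_score, cores, parallel_fraction):
    """Rough estimate: per-Core speed multiplied by the achievable speedup."""
    return single_thread_score * amdahl_speedup(cores, parallel_fraction)

# Hypothetical CPUs as (single-thread score, number of Cores).
few_fast_cores = (4000, 8)
many_slow_cores = (2000, 32)

for parallel_fraction in (0.5, 0.95, 1.0):
    fast = estimated_throughput(*few_fast_cores, parallel_fraction)
    slow = estimated_throughput(*many_slow_cores, parallel_fraction)
    winner = "few fast Cores" if fast > slow else "many slow Cores"
    print(f"parallel fraction {parallel_fraction:.2f}: "
          f"{fast:.0f} vs {slow:.0f} -> {winner}")
```

With a program that is only half parallel, the CPU with fewer but faster Cores wins clearly. The many-Core CPU only pulls ahead when nearly all of the work runs in parallel, which is why scalable software is what makes a high Core count pay off.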

If you have many machines, the promise of energy savings may invite you to pick lower CPU frequencies – but that's an error because you will just waste energy by needing many more machines than necessary (that is, if you are using software that scales on multicore, like G-WAN).

How CPU specs impact program execution – and how code quality has an impact

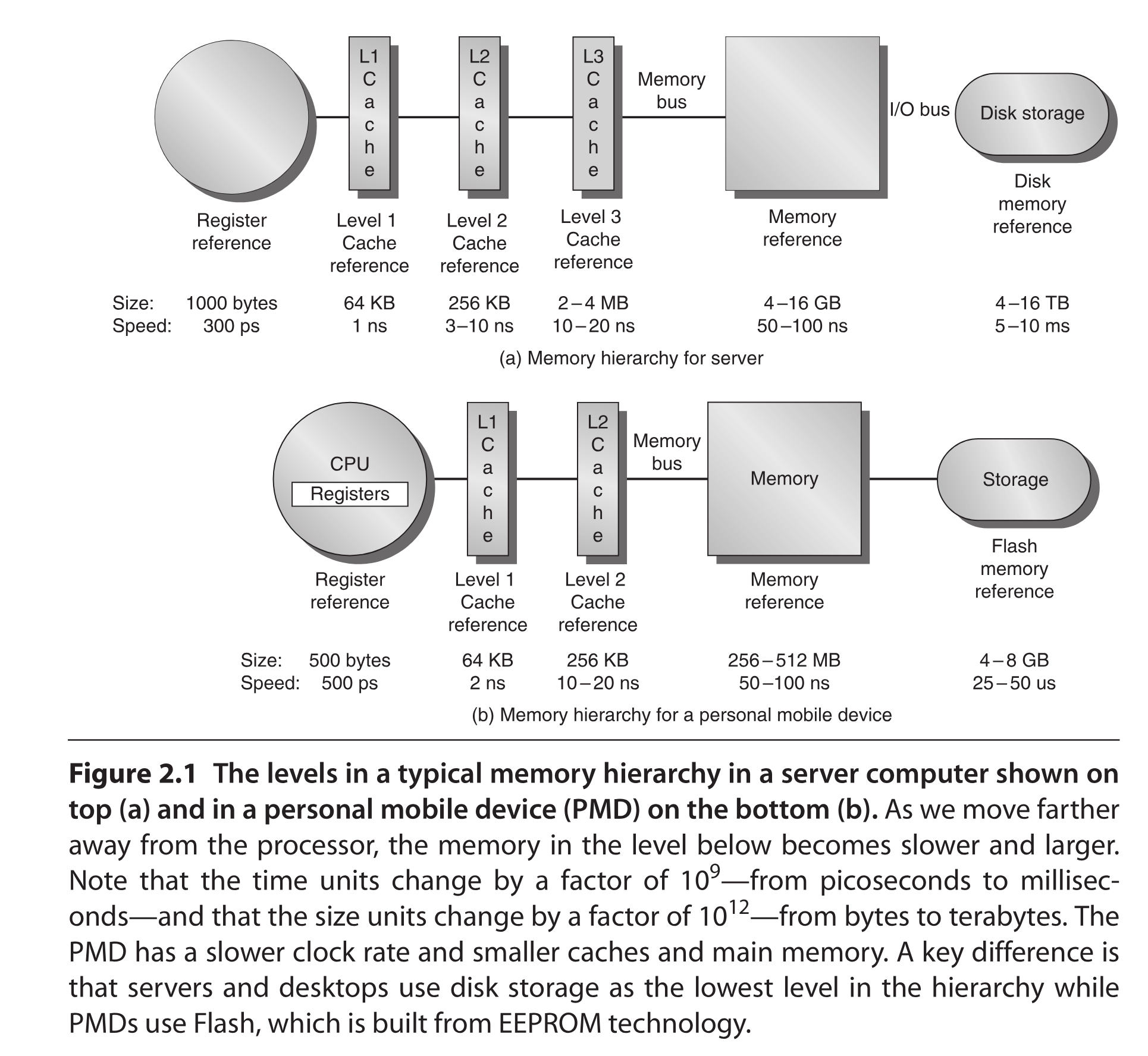

The reason for this multicore 'tax' (the inability of programs to scale) is easier to visualize with a diagram of the hardware memory layout of a computer (see how much faster data access is when made from the CPU caches, as compared to system memory, or, even worse, disks):

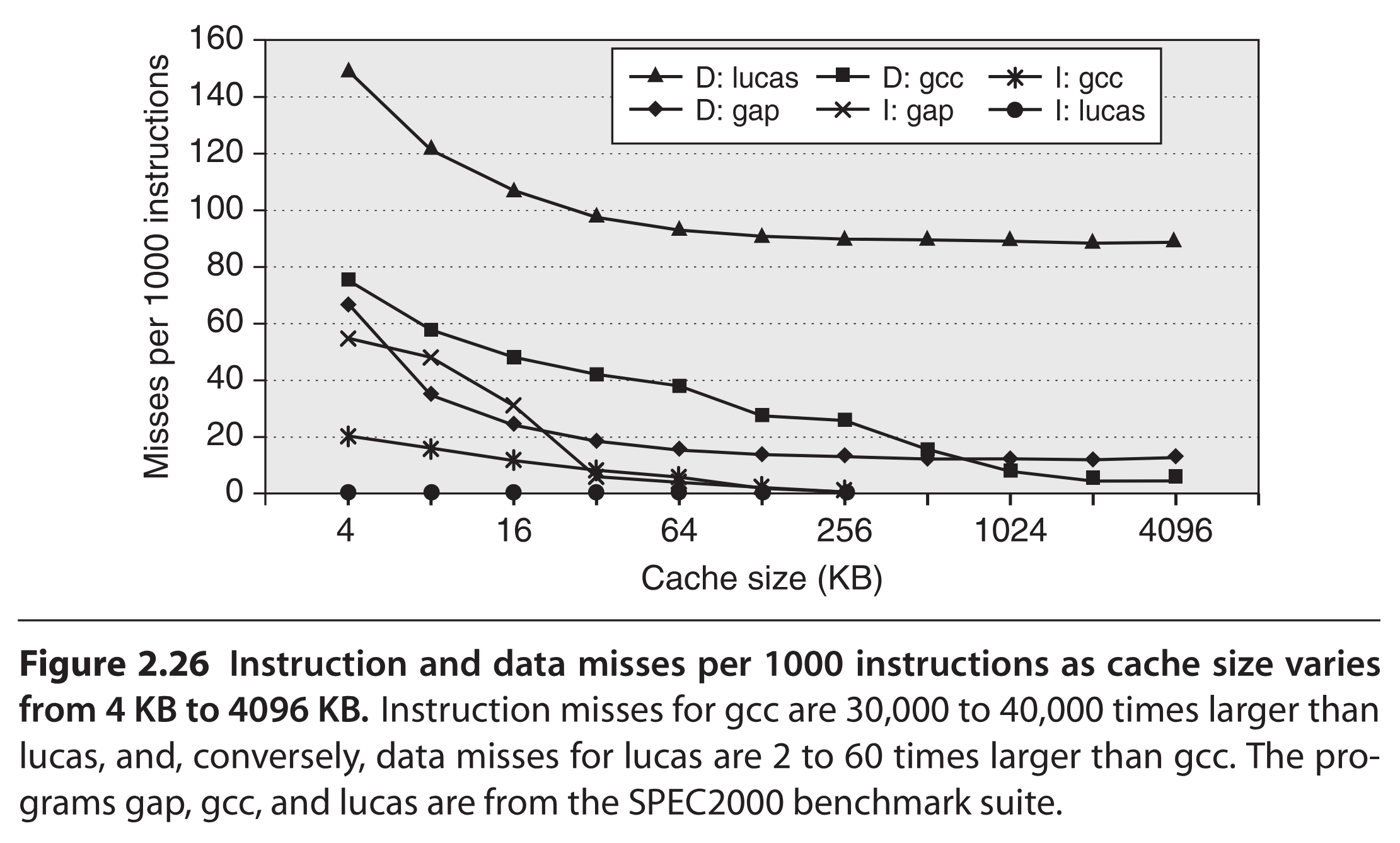

And what is true for data is also true for code. Organizing data and code properly, in compact and contiguous areas for each CPU Core, has dramatic consequences: for example, it saves TLB entries (the TLB caches the translations between the process virtual memory address-space and the system physical memory). The same goes for the CPU cache sizes, where larger is better:

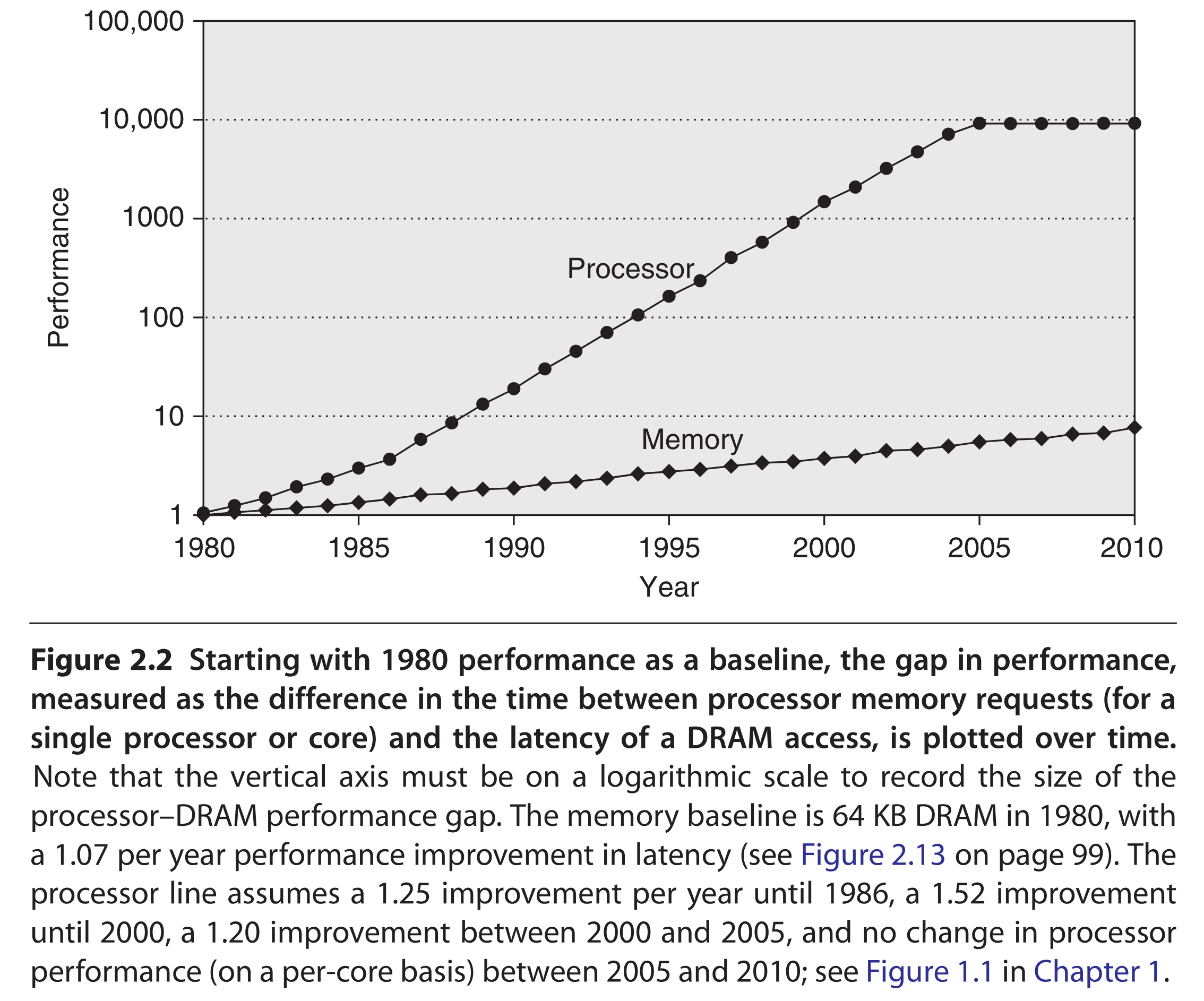
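To give an order of magnitude for the TLB point, the sketch below counts how many memory pages a given footprint spans, using the RSS figures from the "status" excerpt shown earlier. It assumes 4 KiB pages (the usual default on x86-64 Linux). The TLB capacity is a purely illustrative value because it varies between CPU models, and the helper names are invented.

```python
PAGE_SIZE = 4096  # assumption: 4 KiB pages (usual default on x86-64 Linux)

def pages_needed(num_bytes, page_size=PAGE_SIZE):
    """Minimum number of pages (one address translation each) for `num_bytes`."""
    return -(-num_bytes // page_size)  # ceiling division

def tlb_reach(entries, page_size=PAGE_SIZE):
    """Bytes covered by `entries` cached translations, i.e. usable without a TLB miss."""
    return entries * page_size

rss_now, rss_peak = 851_968, 7_200_768   # from the "status" excerpt
HYPOTHETICAL_TLB_ENTRIES = 1536           # illustrative only, CPU-dependent

print("pages for current RSS:", pages_needed(rss_now))
print("pages for peak RSS:   ", pages_needed(rss_peak))
print("TLB reach in bytes:   ", tlb_reach(HYPOTHETICAL_TLB_ENTRIES))
```

A process whose working set fits within the TLB reach rarely pays for a page-table walk, which is one reason why a compact memory footprint helps beyond saving RAM.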

Even worse, CPUs are much, much faster than system memory, so CPUs waste most of their time waiting for data to crunch (or code to execute) – hence the value of keeping things (code and data) together, rather than spending too much time reloading the CPU caches.

How this helps to explain the difference of performance between G-WAN and NGINX

Already in late 2008 (before the first public release in 2009) G-WAN was faster than NGINX on a single CPU Core (or single thread). The code was just better written, simpler, less convoluted.

On my 2008 Intel Xeon 6-Core CPU, I could already appreciate how much better G-WAN was performing, due to better thread isolation (NGINX uses shared memory between several worker processes).

At that time, since the GLibC did not offer memory pools (nor per-thread allocation), I added these features to G-WAN. This reached a new level with SLIMalloc in 2020 because SLIMalloc was designed from day one to perform on single-core CPUs, and to scale on multicore systems.
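The idea behind per-thread memory pools can be sketched in a few lines. This is not G-WAN or SLIMalloc code (both are written in C and their internals are not described here): it is a hypothetical `PerThreadPool` class that only shows the principle, namely that each thread recycles its own buffers from a private free list, so no lock and no shared state is touched on the hot path.

```python
import threading

class PerThreadPool:
    """Pool of fixed-size buffers with one private free list per thread."""

    def __init__(self, buffer_size):
        self.buffer_size = buffer_size
        self._local = threading.local()  # separate attributes for each thread

    def _free_list(self):
        # Lazily create the calling thread's own free list.
        try:
            return self._local.free
        except AttributeError:
            self._local.free = []
            return self._local.free

    def acquire(self):
        """Reuse a buffer released earlier by this thread, else allocate one."""
        free = self._free_list()
        return free.pop() if free else bytearray(self.buffer_size)

    def release(self, buf):
        """Give the buffer back to the calling thread's free list."""
        self._free_list().append(buf)

if __name__ == "__main__":
    pool = PerThreadPool(4096)
    first = pool.acquire()
    pool.release(first)
    print("buffer reused:", pool.acquire() is first)
```

In a C implementation the gain comes from avoiding lock contention and cache-line sharing between Cores. In Python the interpreter lock hides most of that, so this block illustrates the design rather than the speed-up.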

Over the years, I rewrote G-WAN and replaced GLibC with the above constraints in mind: simpler, smaller, more efficient. The result is now much, much more visible than in 2009, because my code has improved, but also because Intel CPUs now embed many more CPU Cores – that execute code much faster.

G-WAN better respects the architecture of multicore CPUs than NGINX, so it runs hundreds of times faster. That's mere computer science.